Changes in how project results are provided to the client.

EPI Question of the Month:

This Question-of-the-Month is about data delivery, data security, company policies and procedures, and most of all, making information available for its intended use as quickly as possible.

As the last step in the chain, what does managing all of these require in order to move testing & analysis results to productive applications, particularly in the current environment of big data?

“Final report writing or analysis formatting is the last step in moving test data and analysis to a commercial product. In my many years of experience, this step is often taken for granted. It’s frequently just assumed that the data from tests and analyses get to a commercial product, but that doesn’t “just happen.” Recently, the emphasis on big data has drawn attention to the essential step of making data available in a user-friendly, easily retrievable manner.

Geo-related data (lithology, mechanical/physical properties, in-situ stress, formation pressure, etc.) range from processed analog sensor information that has been converted to digital form, to qualitative observations of phenomena. Reliable information starts with the processing of sensor signals and requires careful archiving, including provenance, calibrations, upsets, and the procedures for generating and acquiring those data. That’s why input from the entire team is involved in this last step. “Bridging” between the test and analysis engineers and scientists and the end-user clients is required. Quality control, data confidentiality, and delivery schedules have always been critical, and are even more so in the era of “big data.”

Over my three decades of experience, managing and presenting geo-related data has evolved substantially. Initially, hard copy reports were prepared with appended analog presentations of data. Occasionally, an analyst made visual picks from charts. The data were usually summarized in tabular form to emphasize key or anomalous behavior. We moved from this age of the IBM typewriter to prototype, and then progressively more sophisticated, spreadsheets and word processing. This evolution offered improved hard copy reports and greatly facilitated rudimentary interpretive activities. Sharing and transmitting data were still laborious, requiring mainly hard copies. Bandwidth was low, encryption was very basic and generally unnecessary, and transfer protocols were painful.

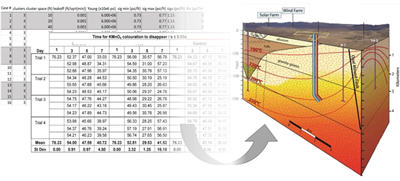

Lengthy data in flat files can now be provided to a client online, in color, and as 3D diagrams.

With time, electronic delivery of data developed, driven by parallel developments in electronic transfer capabilities. Cost constraints favored transfer of tabulated information in flat files, with relational databases used only to a restricted extent. Because data transferred in this form informed multimillion-dollar financial decisions, quality assurance and data delivery security became prime considerations.

What is the next frontier? Possibly the re-use of past data. As an example, test data from miles and miles of rock core exist from past decades. That information may be available electronically, but not in a user-friendly and easily retrievable form. The bulk of these data have likely been underutilized, if utilized at all. The volume of such data and the expenditures that would be required have precluded interpretation or even trend assessments until now. However, advanced computational capabilities using AI and machine learning are now aiming at using such data for modern oil/gas play developments, enhanced recovery assessments, and vetting investment opportunities.

The opportunities for future data utilization are unfathomable. But it’s still all about making information available as quickly as possible. That’s what “big data” is all about. What goes around comes around...”

Sherri has extensive experience reporting and presenting rock data and analysis to end users. This requires bridging between engineers & scientists and end users while considering quality control, data management, technical writing, budget considerations, data confidentiality, delivery schedules, and other issues. For additional discussion, she can be reached at sheroux @ epirecovery.com